jb55 on Nostr: another cool thing is if you're using ollama locally on your macbook on a plane, and ...

another cool thing is if you're using ollama locally on your macbook on a plane, and you have no wifi connection, this will still work.

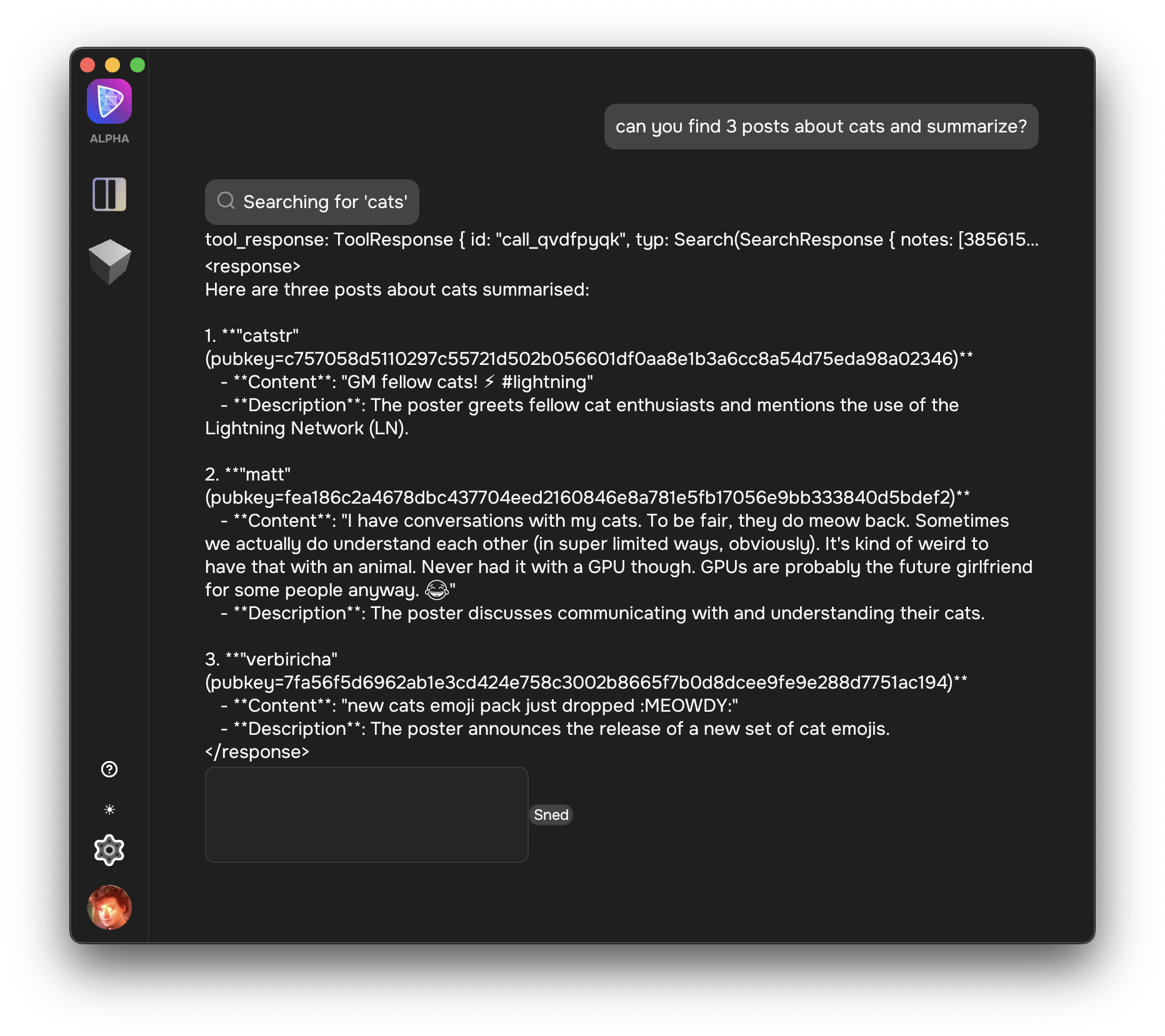

Everything in notedeck is offline first. The question gets sent to the local model, and the model responds with a tool query ({query:cats, limit:3}).

notedeck parses this query, understands it, queries the local notedeck database, formats a response and sends it back to the local ai.

the local ai then takes those formatted notes and replies with a summary.

this all happens back and forth locally on your computer.

how neat is that!?
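to make the back and forth concrete, here is a minimal Python sketch of that loop. it is not notedeck's actual code and it does not call ollama: `fake_local_model`, `search_notes`, `ask` and the `NOTES` list are all hypothetical stand-ins, and the tool query is assumed to be JSON-encoded. it only shows the shape of the exchange: question in, tool query out, local lookup, formatted notes back, summary out.

```python
import json

# Hypothetical in-memory stand-in for the local notedeck database.
NOTES = [
    "my cats knocked over the coffee again",
    "shipping a new notedeck build today",
    "cats are the real owners of the internet",
    "on a plane with no wifi",
    "three cats, one laptop, zero work done",
    "cats again",
]


def search_notes(query, limit):
    """Return up to `limit` notes containing `query` (case-insensitive)."""
    hits = [n for n in NOTES if query.lower() in n.lower()]
    return hits[:limit]


def fake_local_model(messages):
    """Stub standing in for the local model (assumption: no real LLM here)."""
    last = messages[-1]
    if last["role"] == "user":
        # First turn: reply with a tool query instead of prose.
        return {"tool_query": json.dumps({"query": "cats", "limit": 3})}
    # Later turn: summarize the formatted notes that were sent back.
    count = len(last["content"].splitlines())
    return {"text": f"Found {count} notes about cats."}


def ask(question, model=fake_local_model):
    """Run the question through the model, serving tool queries locally."""
    messages = [{"role": "user", "content": question}]
    reply = model(messages)
    while "tool_query" in reply:
        # Parse the tool query and run it against the local notes.
        args = json.loads(reply["tool_query"])
        notes = search_notes(args["query"], args["limit"])
        # Format the results and hand them back to the model.
        formatted = "\n".join(f"- {n}" for n in notes)
        messages.append({"role": "tool", "content": formatted})
        reply = model(messages)
    return reply["text"]


if __name__ == "__main__":
    print(ask("what are people saying about cats?"))
```

nothing in the sketch touches the network, which is the whole point: swap the stub for a model running on the same machine and the loop keeps working with no wifi.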

quoting note1r3a…tchs:

still working on the response rendering, but was able to get dave working with my wireguard ollama instance (on a plane!)

private, local, nostr ai assistant.