openoms on Nostr:

Has anyone running multiple relays compared their performance?

Currently I am using the #LNbits extension which adds a nice GUI to https://code.pobblelabs.org/nostr_relay/index (https://pypi.org/project/nostr-relay/)

It is also readily available on the #Raspiblitz.

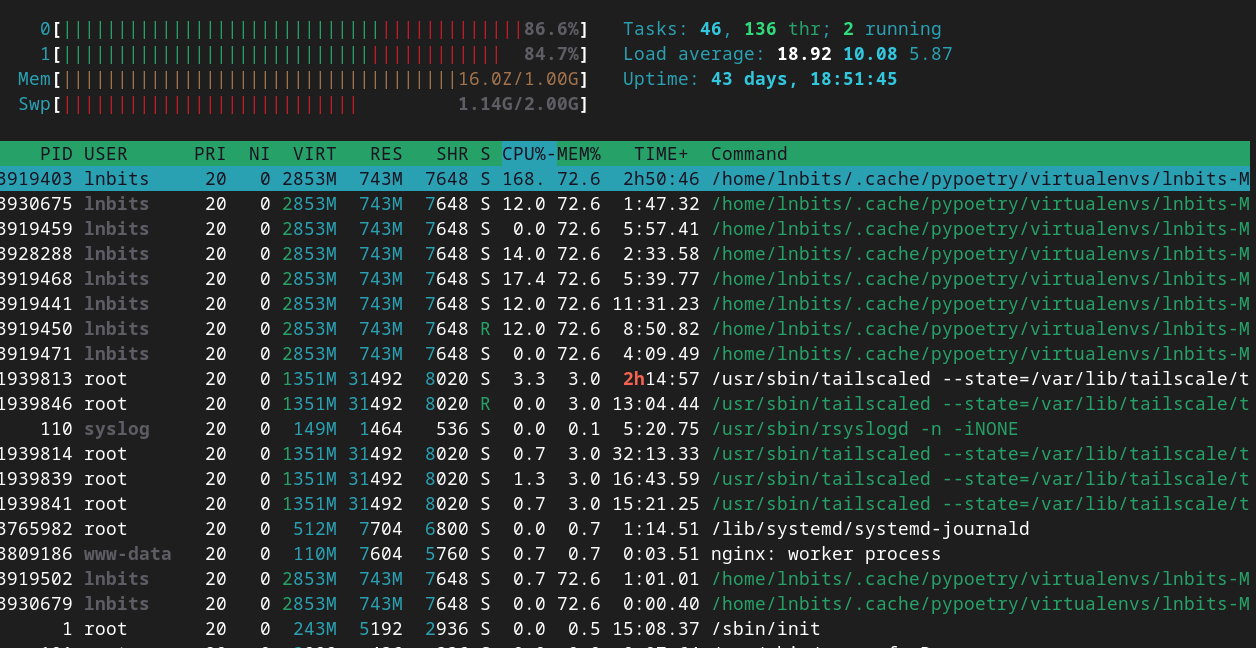

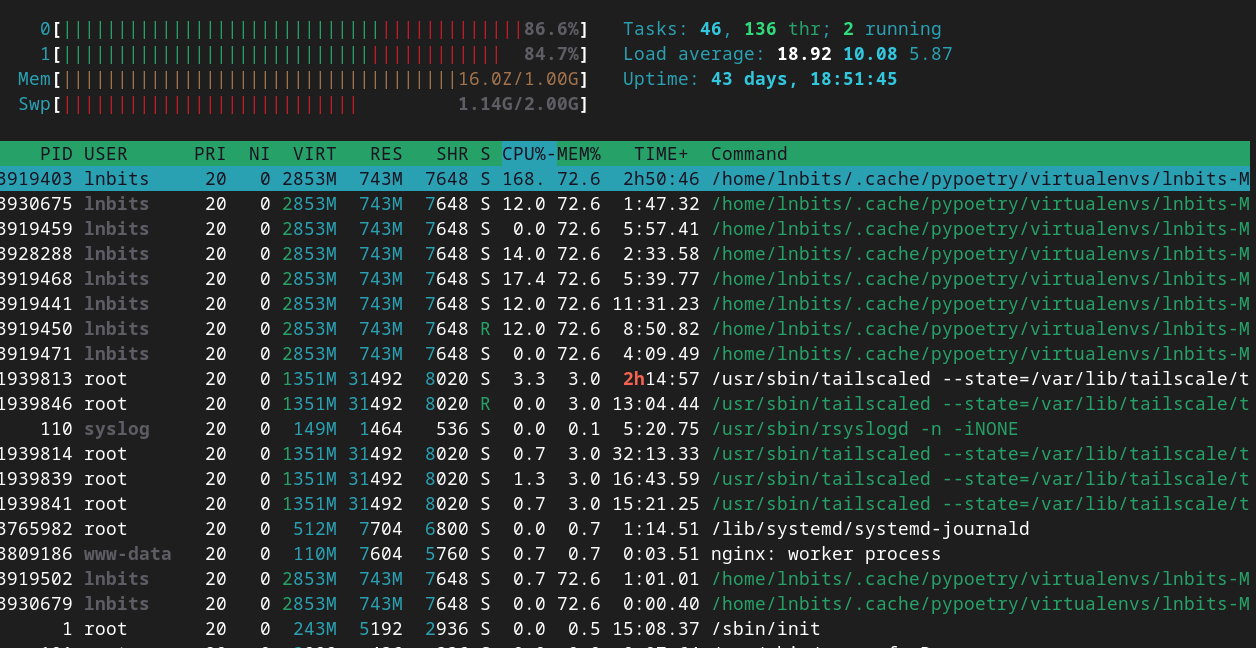

I also run it on a VPS, where it is a bit much (together with the Nostr client I use as a multiplexer) for a single core and 1GB of RAM.

Now I will set it up on an RPi5 with 8GB RAM and a 2TB NVMe and just carry on using it if it is not overloaded.

The tradeoff is that the calls will be tunneled to my self-hosted (but way more powerful) Raspiblitz instead of running directly on the VPS.
