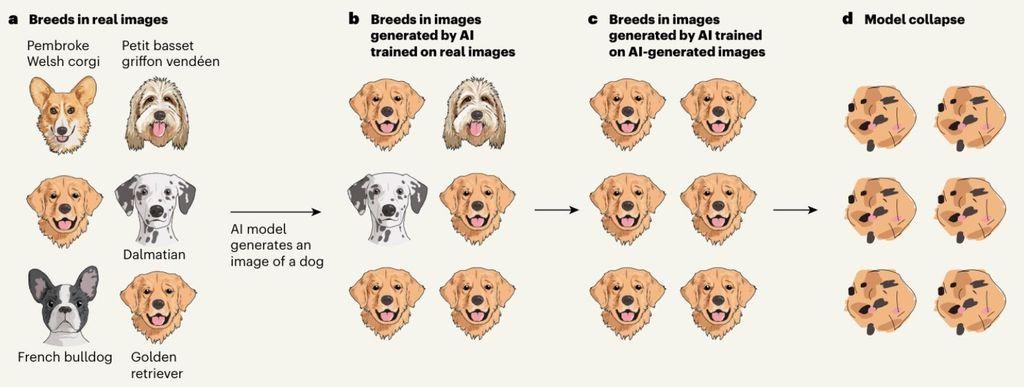

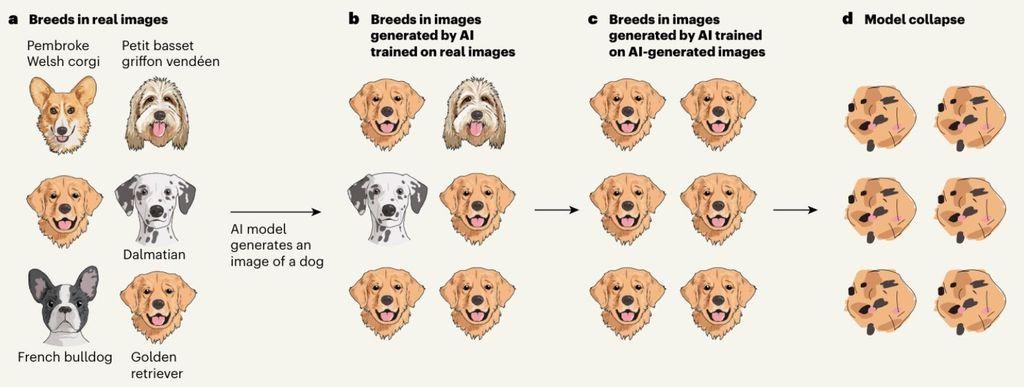

Jeroen Baert on Nostr: This is such a great illustration to explain model collapse: AI models training on ...

Published at 2024-12-08 10:05:02

Event JSON

```json
{
  "id": "26b7be6bf18cb9d03fe690a87709ac683b2ffed1efd6c24096364481607b8513",
  "pubkey": "5780b403349e4741fa1397cb62bc11394ef72ceba73d7f9fa41f471a31dd8aa9",
  "created_at": 1733652302,
  "kind": 1,
  "tags": [
    [
      "imeta",
      "url https://files.mastodon.social/media_attachments/files/113/616/637/197/787/598/original/7aab9e513455d4aa.jpeg",
      "m image/jpeg",
      "dim 1024x387",
      "blurhash U9QvaiTLV@V?%gs.%2V@yseTozNH%MWCozWC"
    ],
    [
      "proxy",
      "https://mastodon.social/users/jbaert/statuses/113616637308354529",
      "activitypub"
    ]
  ],
  "content": "This is such a great illustration to explain model collapse: AI models training on AI-generated data become worse over time. A challenge, because most companies training large models are running out of data (https://observer.com/2024/07/ai-training-data-crisis/) and increasingly rely on hybrid sets of original and synthetic data.\n\nNature article: https://www.nature.com/articles/s41586-024-07566-y\n\nhttps://files.mastodon.social/media_attachments/files/113/616/637/197/787/598/original/7aab9e513455d4aa.jpeg",
  "sig": "d5b72d6ceeb10753ee49f65ffd60a0b3eebabd0a1246e8a0914e4acc0c3394154a769a7cde94e22dd9d5d1ad145c846d306fd2883095da0fef5596a09748fab8"
}
```
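The post's core claim, that models trained on AI-generated data get worse over time, can be illustrated with a toy simulation. The sketch below is a hypothetical illustration, not the experiment from the Nature article. It assumes each "model" is just a one-dimensional Gaussian fitted by maximum likelihood to a small sample drawn from the previous generation's model, with generation 0 standing in for the original data. The function name `simulate_collapse` and its parameters are made up for this example.

```python
import random
import statistics


def simulate_collapse(n_samples: int = 10, generations: int = 200, seed: int = 0) -> list[float]:
    """Hypothetical toy model of model collapse (not from the post or the paper).

    Each generation fits a Gaussian (mean, std) by maximum likelihood to
    samples drawn only from the previous generation's fitted Gaussian.
    Returns the fitted standard deviation per generation, starting from
    the original distribution N(0, 1).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: stand-in for the original data
    history = [sigma]
    for _ in range(generations):
        # The next "model" sees only synthetic data from the current one.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # MLE estimate: divides by n
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = simulate_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:3d}: fitted std = {stds[gen]:.3g}")
```

With only 10 samples per generation, estimation errors compound instead of averaging out, and the fitted standard deviation tends to drift toward zero. Values from the tails of the original distribution then stop being generated at all, which is a simplified version of the degradation the post describes. Mixing in a share of original data each generation is one way to explore the "hybrid sets" the post mentions.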