jb55 on Nostr: As performance optimization enjoyer i can’t help but look at the transformer ...

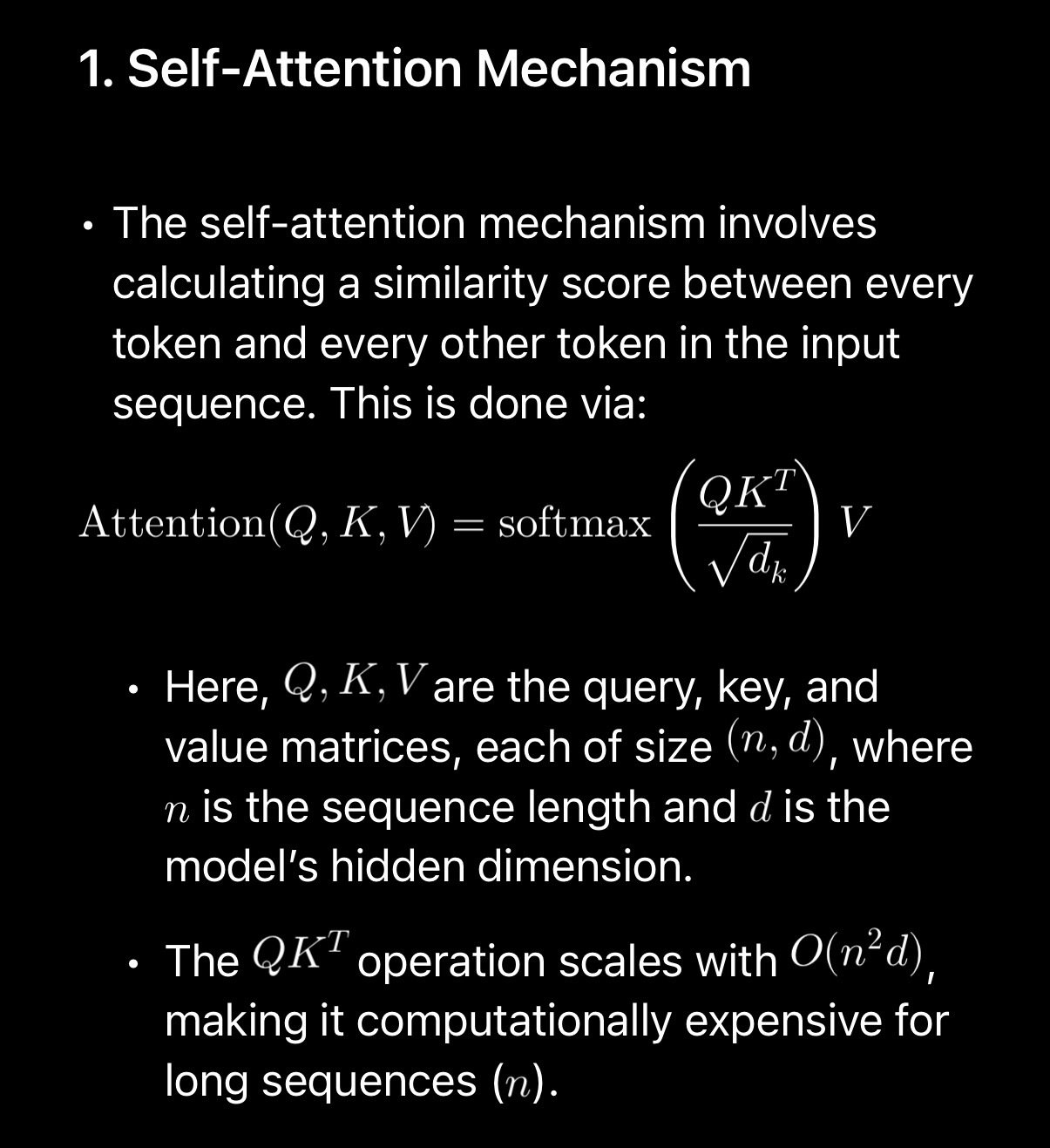

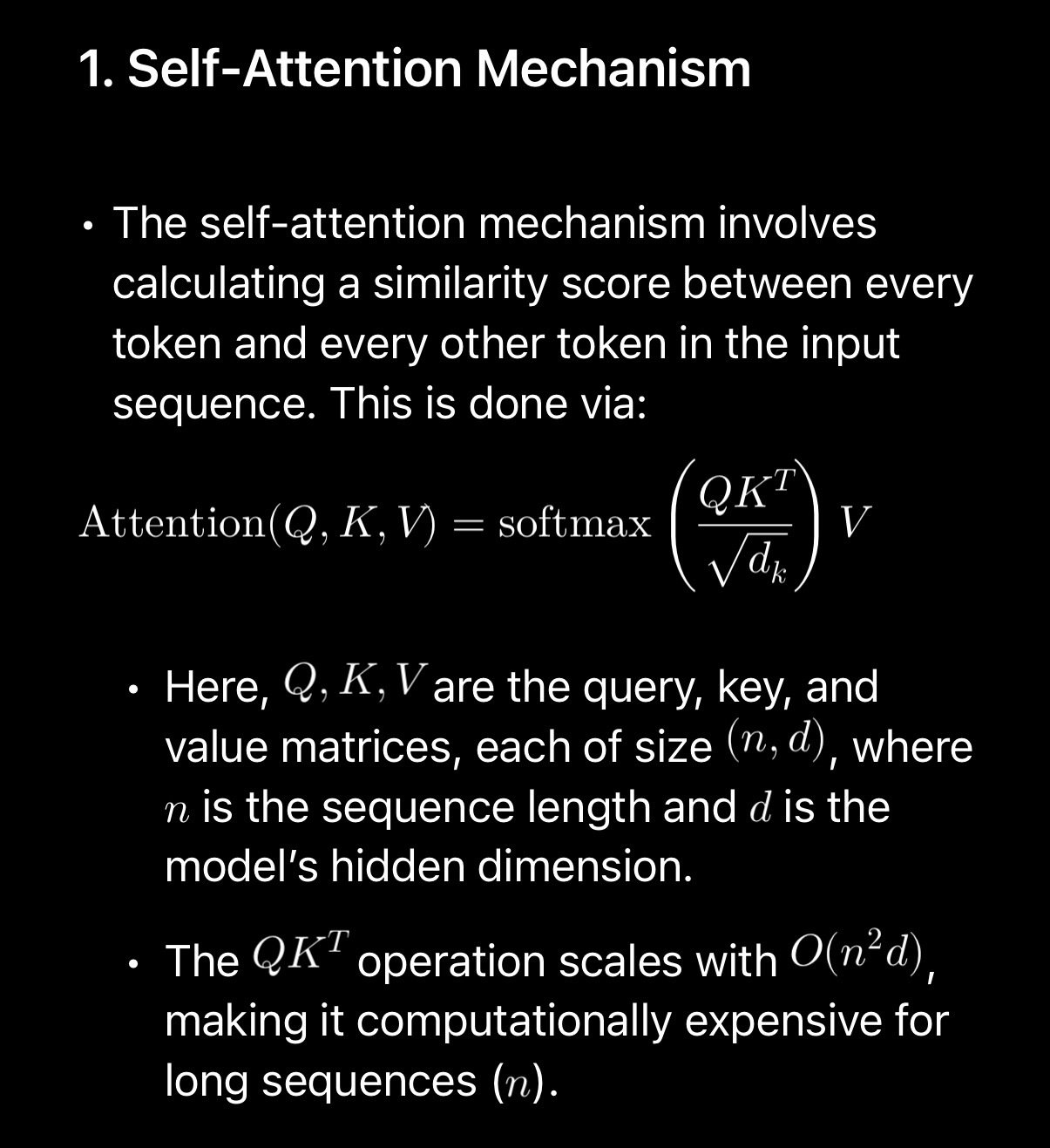

As a performance optimization enjoyer, I can’t help but look at the transformer architecture in LLMs and notice how incredibly inefficient it is, specifically the attention mechanism.

Looks like I am not the only one who has noticed this, and it seems people are working on it.

https://arxiv.org/pdf/2406.15786

Lots of AI researchers are not performance engineers, and it shows. I suspect we can reach similar results with much lower computational complexity. This will be good news if you want to run these things on your phone.
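To make the complexity point concrete, here is a rough sketch of plain single-head scaled dot-product attention in pure Python. The post does not say which cost it has in mind, so this assumes the commonly cited one: every query is scored against every key, which makes the work grow with the square of the sequence length. The names `naive_attention` and `score_multiply_adds` are made up for illustration, and none of this is taken from the linked paper.

```python
import math


def naive_attention(q, k, v):
    """Single-head scaled dot-product attention over lists of float vectors.

    q, k, v each hold n vectors. Illustrative only: no batching, masking,
    or multiple heads.
    """
    d = len(q[0])
    out = []
    for qi in q:
        # One score per (query, key) pair. Over all queries this is the
        # n x n score matrix that dominates the cost for long sequences.
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        # Numerically stable softmax over the scores for this query.
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        # Weighted average of the value vectors.
        out.append([
            sum(w * vj[c] for w, vj in zip(weights, v)) / total
            for c in range(len(v[0]))
        ])
    return out


def score_multiply_adds(n, d):
    """Approximate multiply-adds for QK^T plus the weighted sum over V."""
    return 2 * n * n * d
```

Under this count, doubling the sequence length quadruples the attention work, which is why approaches that skip or approximate part of that computation matter for small devices like phones.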
