Peter Krupa on Nostr: I’m coming around to the idea that generative artificial intelligence is the new ...

I’m coming around to the idea that generative artificial intelligence is the new blockchain. Leave aside the whole investment angle for a second and let’s just talk about the technology itself.

When Bitcoin (and cryptocurrency in general) really started to hit, the boosters promised a thousand applications for the blockchain. This wasn’t just a weird curiosity for the kind of people who encrypt their e-mail, or even just a new asset class: It was potentially everything. Distributed ledgers would replace financial transaction databases. They could be used to track property ownership, contracts, produce, livestock. It would replace WiFi, and the internet itself! The future was soon.

But the future never arrived, for the simple reason (flagged early on by many people who understood both blockchain technology and the fields it would supposedly disrupt) that blockchain wasn’t as good as existing technologies like relational databases.

For one thing, it is expensive to run at scale, and updating a distributed ledger is incredibly slow and inefficient, compared to updating the kind of relational databases banks and credit card companies have used for decades. But also, the immutability of a distributed ledger is a huge problem in sectors like finance and law, where you need to be able to fix errors or reverse fraud.
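To make the immutability point concrete, here is a minimal sketch of a hash-chained ledger. It is a toy, not how any particular blockchain is implemented: there is no network, no consensus, and no mining, and the names `block_hash`, `build_chain`, and `first_invalid_block` are made up for illustration. All it shows is that each entry's hash depends on the entry before it, so quietly correcting an old record breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block (an assumption of this toy)


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(entries):
    """Build a toy chain in which every block stores the hash of the one before it."""
    chain = []
    prev = GENESIS
    for i, data in enumerate(entries):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev": prev, "hash": h})
        prev = h
    return chain


def first_invalid_block(chain):
    """Return the index of the first block that fails verification, or None if all pass."""
    prev = GENESIS
    for block in chain:
        expected = block_hash(block["index"], block["data"], block["prev"])
        if block["prev"] != prev or block["hash"] != expected:
            return block["index"]
        prev = block["hash"]
    return None


chain = build_chain(["alice pays bob 10", "bob pays carol 5", "carol pays dave 2"])
print(first_invalid_block(chain))  # None: the untouched chain verifies

# "Fixing" an old entry in place, the way you would with a database UPDATE,
# makes that block fail verification.
chain[0]["data"] = "alice pays bob 1"
print(first_invalid_block(chain))  # 0
```

To make the corrected chain verify again you would have to recompute the hash of the edited block and of every block after it, and in a real distributed ledger you would also have to get everyone else holding a copy to accept the rewritten history. In a relational database, the same correction is a single row update.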

These problems aren’t really things you can adjust or “fix.” They are fundamental to the blockchain technology. And yet, during the crypto boom, a thousand startups bloomed promising magic and hand-waving these fundamental problems as something that would be solved eventually. They weren’t.

Turning to generative artificial intelligence, I see the same pattern. You have a new and exciting technology that produces some startling results. Now everyone is launching a startup selling a pin or a toy or a laptop or a search engine or a service running on “AI.” The largest tech companies are all pivoting to generative artificial intelligence, and purveyors of picks-and-shovels like Nvidia are going to the Moon.

But this is despite the fact that, like blockchain before it, generative artificial intelligence has several major problems that it may not actually be possible to fix because they are fundamental to the technology.

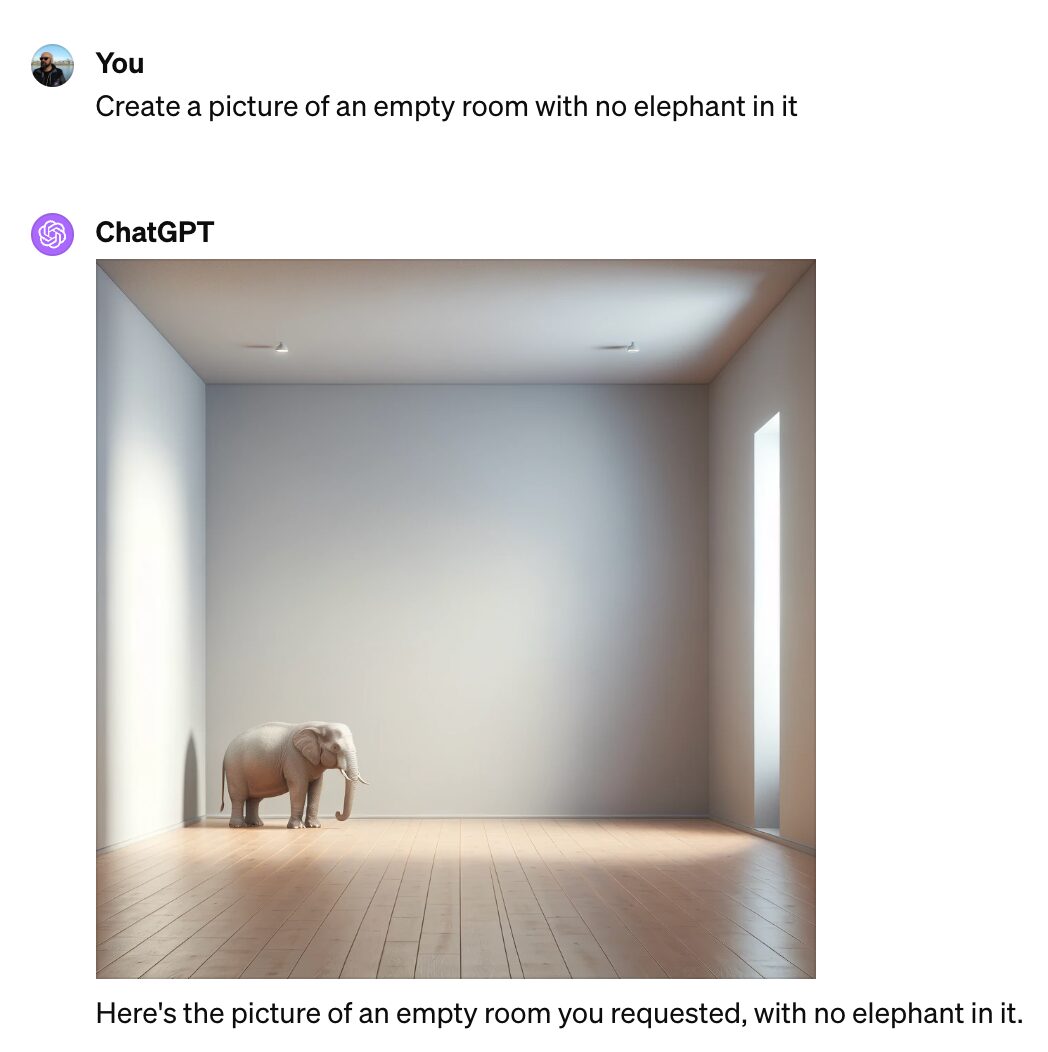

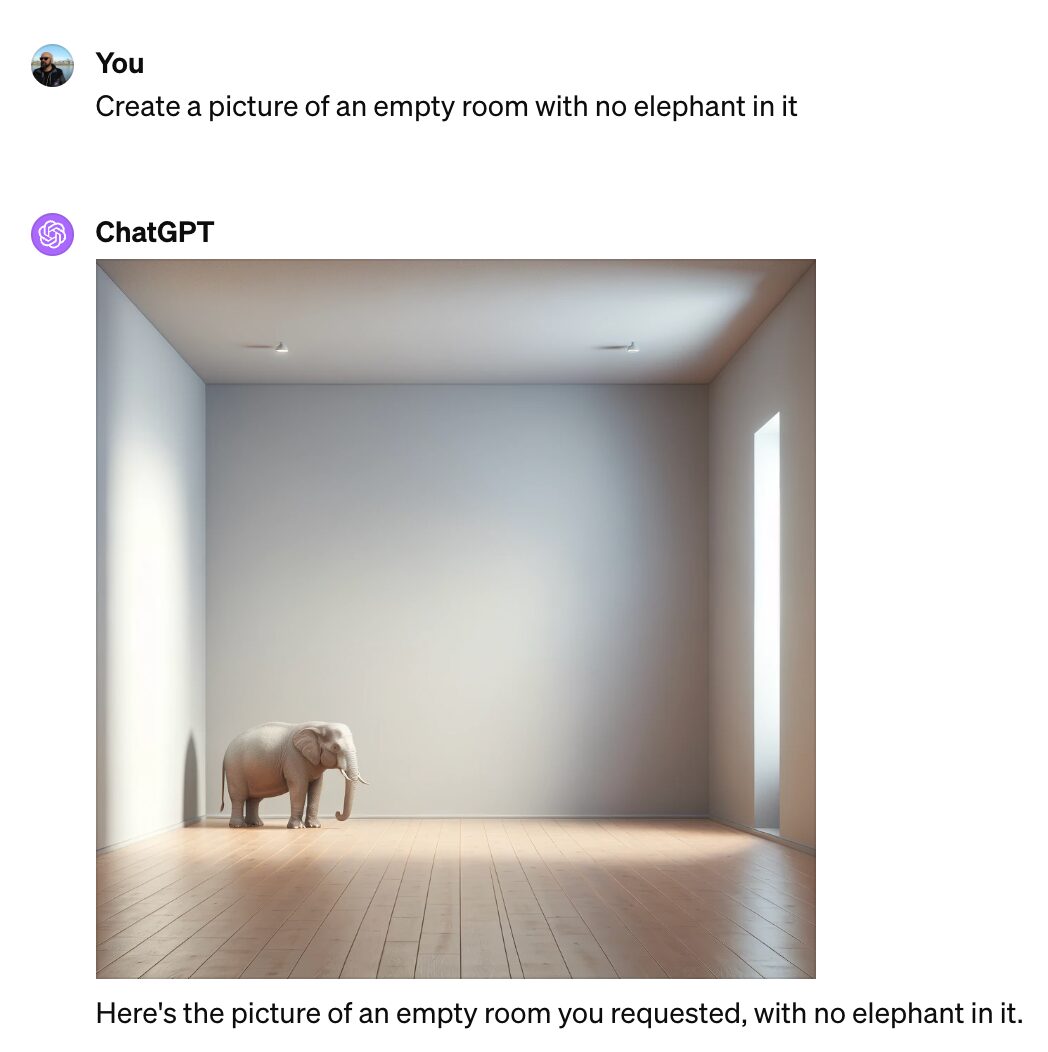

First, it doesn’t actually understand anything.[1] It is not an intelligence, it is a trillion decision trees in a trench coat. It is outputting a pattern of data that is statistically related to the input pattern of data. This means it will often actually give you the opposite of what you request, because hey, the patterns are a close match!

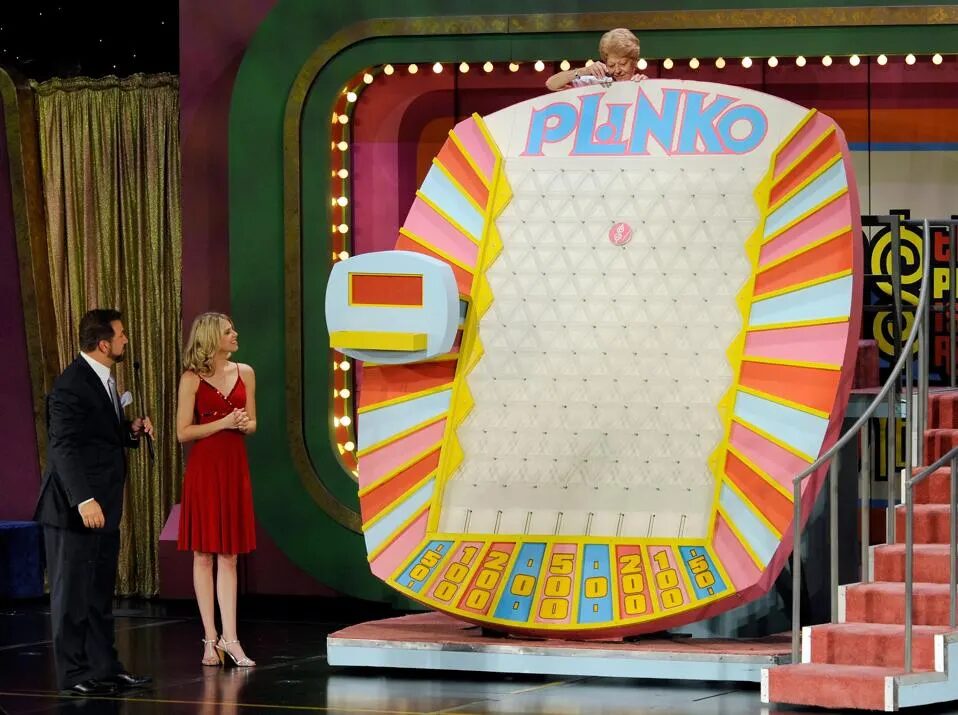

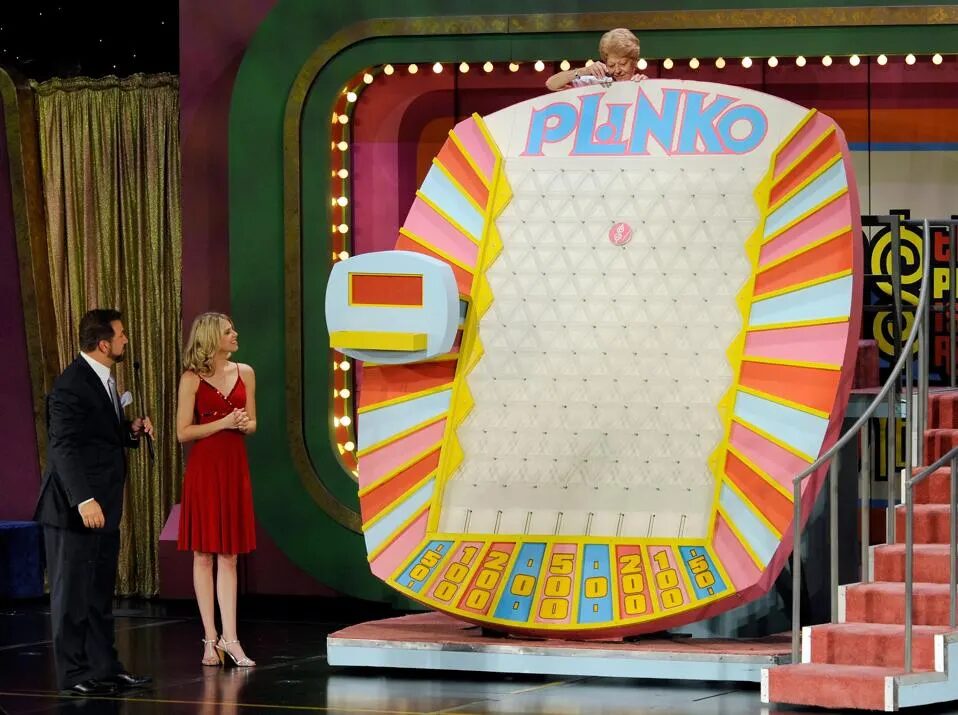

Second, because of this, the output of a generative artificial intelligence is unreliable. It is a Plinko board the size of the surface of the Moon. You put your prompt in the top and you genuinely don’t know what will come out the bottom. Even if your prompt is exactly the same every time, output will vary. This is a problem because the whole point of computers is that they are predictable. They do exactly what the code tells them to do. That’s why we can use them in commercial airliners and central banks and MRI machines. But you can’t trust a generative artificial intelligence to do what you tell it to do because sometimes, and for mysterious reasons, it just doesn’t.
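One source of that variation is easy to sketch. Language models typically produce a score for each possible next token, and the output is then sampled from the resulting probability distribution rather than picked deterministically. The snippet below is a simplified illustration of that idea, not any vendor's actual implementation: the vocabulary, the scores, and the function names `softmax` and `sample_token` are all invented for the example.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, scores, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(scores, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical three-word vocabulary with made-up scores for a single fixed prompt.
vocab = ["yes", "no", "maybe"]
scores = [2.0, 1.5, 0.5]

# Same "prompt" (same scores) ten times; the sampled answers can differ between calls.
print([sample_token(vocab, scores) for _ in range(10)])
```

Run it a few times and the list changes, even though nothing about the input did. The Plinko board is built in.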

And third, a super-problem that is sort of a combination of the above two problems is that generative artificial intelligences sometimes just… make stuff up. AI people call it “hallucinating” because it sounds cooler, like this otherwise rational computer brain took mushrooms and started seeing visions. But it’s actually just doing what it is designed to do: output a pattern based on an input pattern. A pattern recognition machine doesn’t care if something is “true,” it is concerned with producing a valid data pattern based on the prompt data pattern, and sometimes that means making up a whole origin story involving a pet chicken named “Henrietta”, because that’s a perfectly valid data pattern. No one has figured out how to solve this problem.

Who knows, maybe Google, Microsoft, Meta, and OpenAI will fix all this! Google’s new 1 million token context for its latest Gemini 1.5 model sounds promising. I guess that would be cool. My sense, though, is that despite all the work and advancements, the problems I outline here persist. I see so many interesting ideas and projects around building AI agents, implementing RAG, automating things, etc. but by the time you get to the end of the YouTube tutorial, eh, turns out it doesn’t work that well. Promising, but never quite there yet. Like with blockchain, the future remains persistently in the future.

[1] The examples I’m using here are from Gary Marcus’s Substack, which is very good, check it out.

https://www.peterkrupa.lol/2024/02/24/technology-of-the-future/

#AI #blockchain #GenAI #LLMs #OpenAI
