THE MARTYR OF BUTLERIAN JIHAD on Nostr

Just so that you know, I'm writing this post in Emacs and I still hate GNU and everything it stands for.

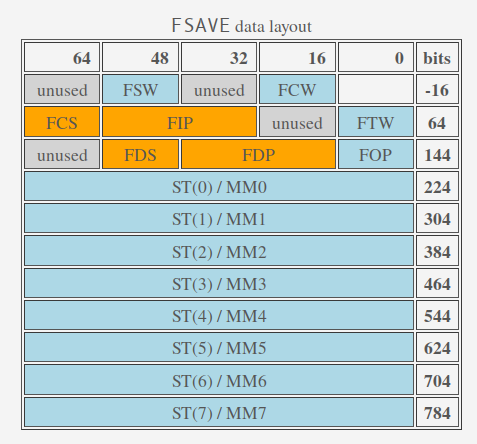

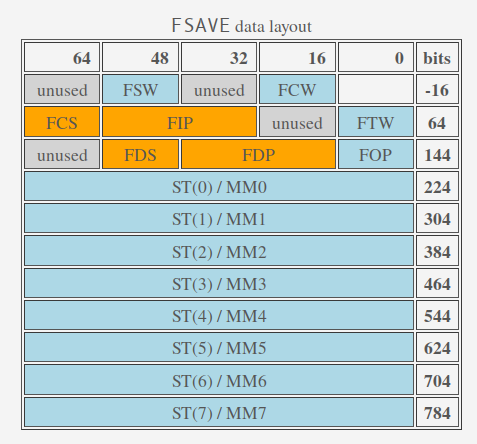

One of the most famous inventions of GNU, right after the RMS foot diet and interjections, is GDB, aka the only known debugger for L'Eunuchs systems in the known universe (shut up, LLDB fans!), and this is what I would like to talk about today. A long time ago, when computers were slow, Intel created their famous x86 line of CPUs. All was good, but then somehow they decided that this shit would need floating point support, and hence x87 was born. One of the instructions that x87 provided was FSAVE, which takes a pointer and saves the current FPU state (pic. 1) to the memory it points to. So far so good.

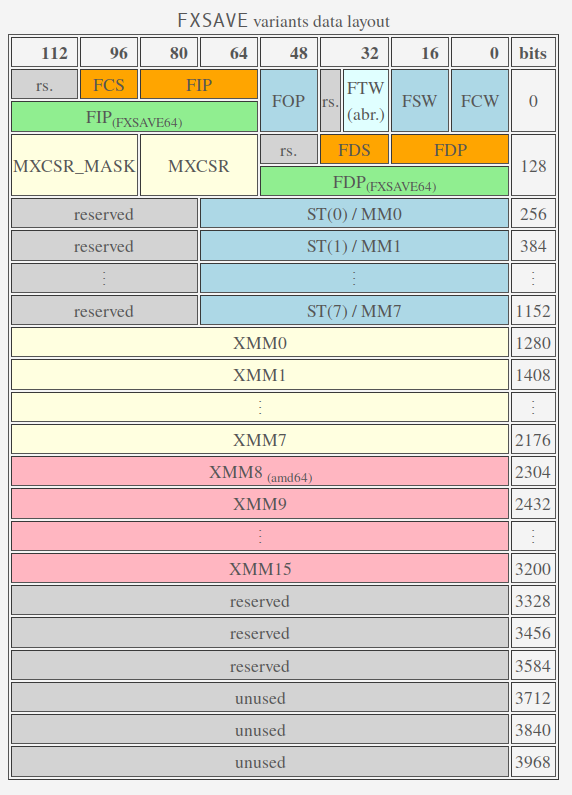

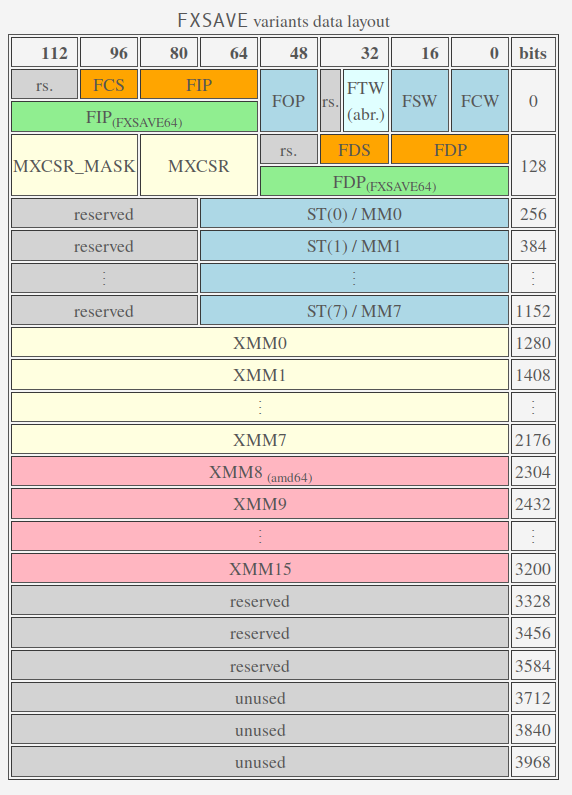

Fast forward some 20 years: Intel comes up with MMX and later SSE bullshit instructions, the latter of which can also be used for floating point operations. Since the FSAVE data layout is set in stone and Intel cherishes backwards compatibility above pretty much everything else, the SSE extension brings the FXSAVE instruction. It works basically the same way as FSAVE, but saves much more data (pic. 2) into a 512-byte area, including the SSE registers. So far so good, again.
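To make the layout a bit more concrete, here is a minimal sketch that pulls the x87 and SSE control fields out of a raw FXSAVE image. The offsets follow the legacy FXSAVE layout as I understand it from the Intel manuals; the function name `parse_fxsave_header` and the returned dictionary keys are made up for illustration and do not come from any real library.

```python
import struct

FXSAVE_SIZE = 512  # FXSAVE writes a fixed 512-byte area
FSAVE_SIZE = 108   # FSAVE in 32-bit protected mode: 28-byte environment + 8 x 10-byte registers


def parse_fxsave_header(area: bytes) -> dict:
    """Extract a few fields from a raw FXSAVE image (hypothetical helper)."""
    if len(area) != FXSAVE_SIZE:
        raise ValueError("expected a 512-byte FXSAVE area")
    # Bytes 0..7: control word, status word, abridged tag byte, reserved byte, last opcode.
    fcw, fsw, ftw, _reserved, fop = struct.unpack_from("<HHBBH", area, 0)
    # Bytes 24..31: MXCSR and MXCSR_MASK.
    mxcsr, mxcsr_mask = struct.unpack_from("<II", area, 24)
    # ST0..ST7 start at byte 32; each slot is 16 bytes, of which 10 hold the value.
    st = [bytes(area[32 + 16 * i: 32 + 16 * i + 10]) for i in range(8)]
    return {
        "fcw": fcw,
        "fsw": fsw,
        "ftw_abridged": ftw,
        "fop": fop,
        "mxcsr": mxcsr,
        "mxcsr_mask": mxcsr_mask,
        "st": st,
    }
```

Note that the tag field here is a single byte, while FSAVE stores a 16-bit tag word. That difference is where the trouble starts later on.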

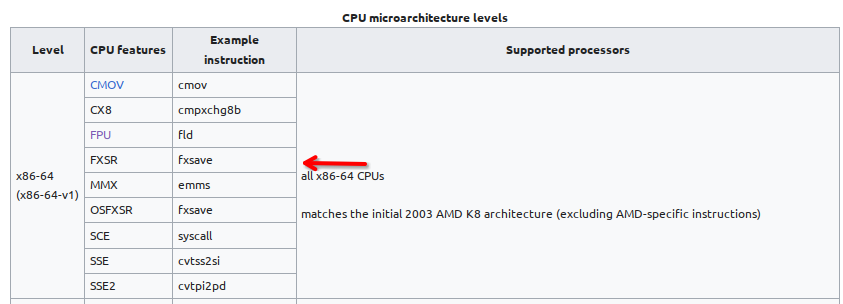

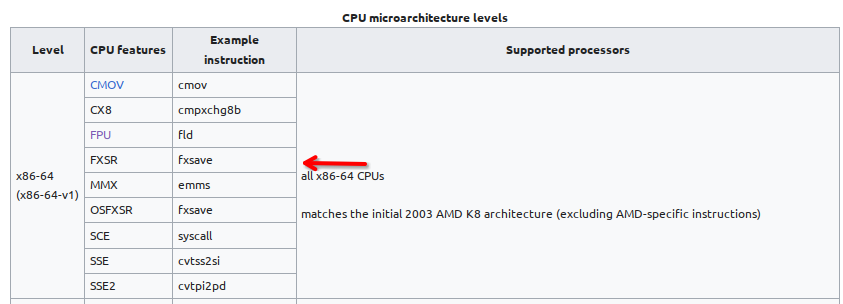

Advance a few more years: AMD rolls out their brand new and cool AMD64 architecture, with Intel soon following suit. AMD64 (or x86_64) adds a bunch of new registers, etc etc. But what is more important to our discussion here, every x86_64 CPU includes support for SSE and the FXSAVE instruction (pic. 3). As far as I know, every OS today uses FXSAVE (or its successor XSAVE, whose legacy region keeps the same layout) when in 64-bit mode, because there is no reason not to. Also, FSAVE reinitializes the FPU after saving its state and FXSAVE doesn't, so it only makes sense to use FXSAVE for context switches and whatnot.

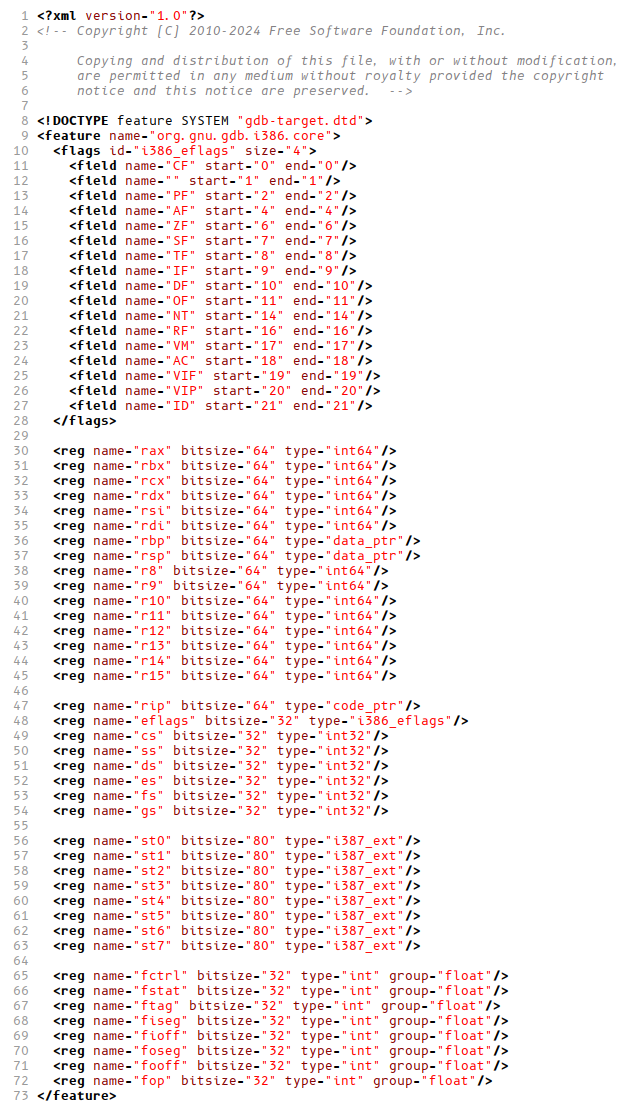

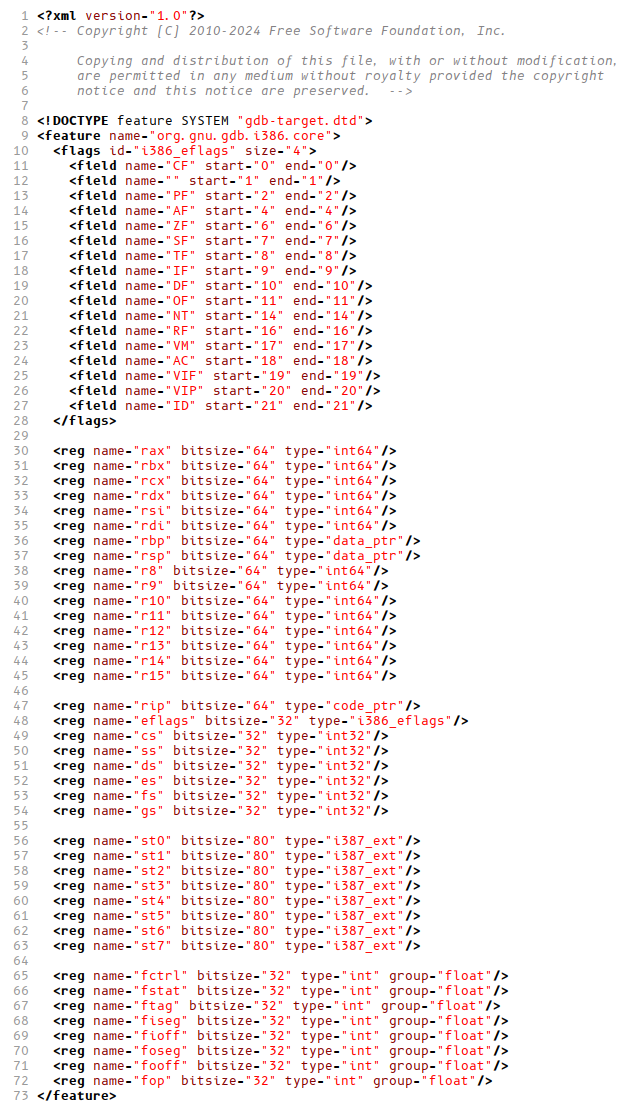

Now enter GDB. GDB supports remote debugging and provides its very own protocol for this. Any system can implement a GDB debug server and have a debugger attached. The L'Eunuchs kernel does this, for example: you can happily debug your kernel with gdb over serial or ethernet connections. For every architecture, GDB defines a core set of registers in its protocol. For x86(_32), this includes the x87 FPU registers, mirroring the FSAVE data layout, mostly because every x86 CPU that can run a GDB server probably has an FPU and nobody cares about your ancient i386. This is fine. What is not fine is that for x86_64, the protocol also includes the same FSAVE data (pic. 4, also https://sourceware.org/git/?p=binutils-gdb.git;a=blob;f=gdb/features/i386/64bit-core.xml;h=5cbae2c0ef489699fa6c6e8ed07f5fe6d3b8a6b6;hb=HEAD). Not FXSAVE, just FSAVE.
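For those who have never looked at the wire format: a packet in GDB's remote serial protocol is the payload wrapped as `$payload#xx`, where `xx` is the sum of the payload bytes modulo 256 written as two hex digits. A rough sketch of the framing follows. The helper names `rsp_checksum` and `rsp_frame` are mine, and the sketch assumes the payload contains none of the characters that need escaping (`$`, `#`, `}`, `*`), so it is nowhere near a complete stub.

```python
def rsp_checksum(payload: bytes) -> int:
    """Sum of all payload bytes, modulo 256."""
    return sum(payload) % 256


def rsp_frame(payload: bytes) -> bytes:
    """Wrap a payload as $<payload>#<two hex digits> (no escaping handled)."""
    return b"$" + payload + b"#" + b"%02x" % rsp_checksum(payload)
```

For example, `rsp_frame(b"g")` gives `b"$g#67"`, which is the request to read the general register set. The reply is a hex dump of the registers in the order given by the target description, and that is exactly where those FSAVE-style fields are expected to appear.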

How might this be a problem, one could ask? Again, no x86_64 system, as far as I'm aware, uses FSAVE today, because there is literally no reason to, other than compatibility with the GDB wire protocol. The FXSAVE format is a superset of the FSAVE format, but the included data is slightly different. For one, both include register tags, but FSAVE stores a full 16-bit tag word with two bits per register (valid, zero, special, empty), while FXSAVE stores an abridged 8-bit tag with one bit per register (empty or not). Going from the FXSAVE form back to the FSAVE form means inspecting the contents of every register, so some non-trivial conversion code must be implemented. All because someone decided to just copy-paste a piece of spec from the older architecture to the new one without even bothering to think about what they were doing! A total and complete disgrace for the programming community.
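To show what that conversion looks like, here is a sketch of both directions. It assumes the usual x87 rules: tag bits are indexed by physical register, the saved ST(i) values are in stack order relative to the TOP field of the status word, and a non-empty register is classified by its exponent and significand. All function and constant names are hypothetical. Treat it as an illustration under those assumptions, not as the code any particular kernel or stub uses.

```python
TAG_VALID, TAG_ZERO, TAG_SPECIAL, TAG_EMPTY = 0, 1, 2, 3


def classify_st(raw: bytes) -> int:
    """Compute the 2-bit tag for one 10-byte little-endian x87 value."""
    significand = int.from_bytes(raw[0:8], "little")
    exponent = int.from_bytes(raw[8:10], "little") & 0x7FFF  # drop the sign bit
    if exponent == 0x7FFF:
        return TAG_SPECIAL  # infinity or NaN
    if exponent == 0:
        # Either a true zero or a denormal.
        return TAG_ZERO if significand == 0 else TAG_SPECIAL
    # Normal numbers have the explicit integer bit (bit 63) set.
    return TAG_VALID if significand >> 63 else TAG_SPECIAL


def full_tag_word(abridged: int, fsw: int, st_regs: list) -> int:
    """Rebuild the 16-bit FSAVE tag word from FXSAVE-style state.

    st_regs holds ST0..ST7 in stack order, 10 bytes each.
    """
    top = (fsw >> 11) & 7  # TOP field of the status word
    ftw = 0
    for phys in range(8):
        if abridged & (1 << phys):
            # Physical register `phys` is currently ST((phys - TOP) mod 8).
            tag = classify_st(st_regs[(phys - top) & 7])
        else:
            tag = TAG_EMPTY
        ftw |= tag << (2 * phys)
    return ftw


def abridged_tag(full: int) -> int:
    """The easy direction: collapse a 16-bit tag word to the 8-bit form."""
    out = 0
    for phys in range(8):
        if (full >> (2 * phys)) & 3 != TAG_EMPTY:
            out |= 1 << phys
    return out
```

The asymmetry is the annoying part: collapsing the full tag word is a trivial loop, while rebuilding it needs the status word and the contents of all eight registers.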
