iefan 🕊️ on Nostr

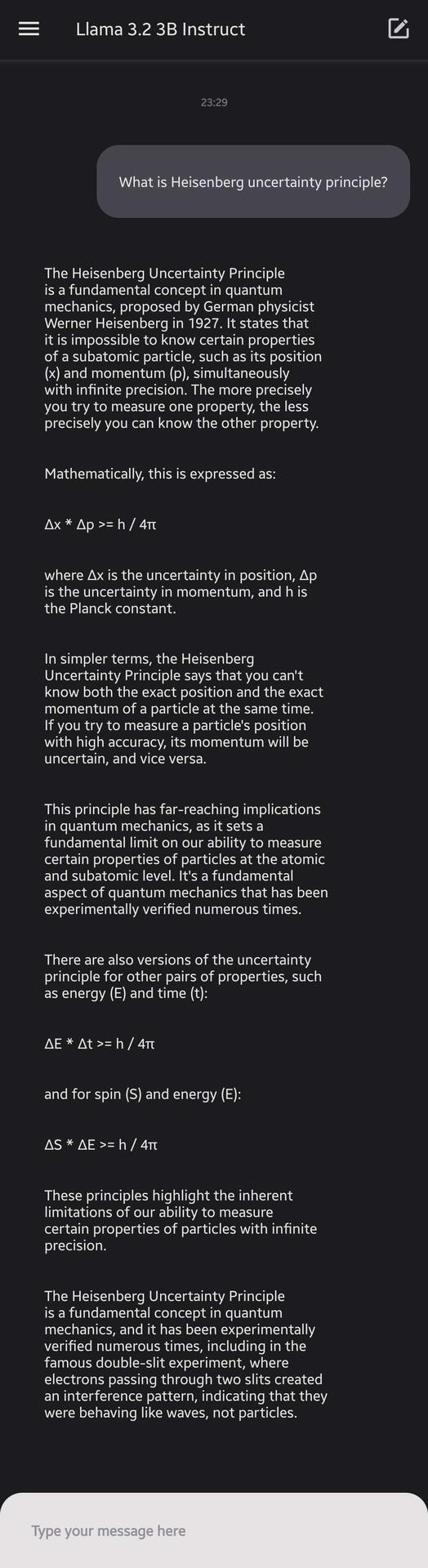

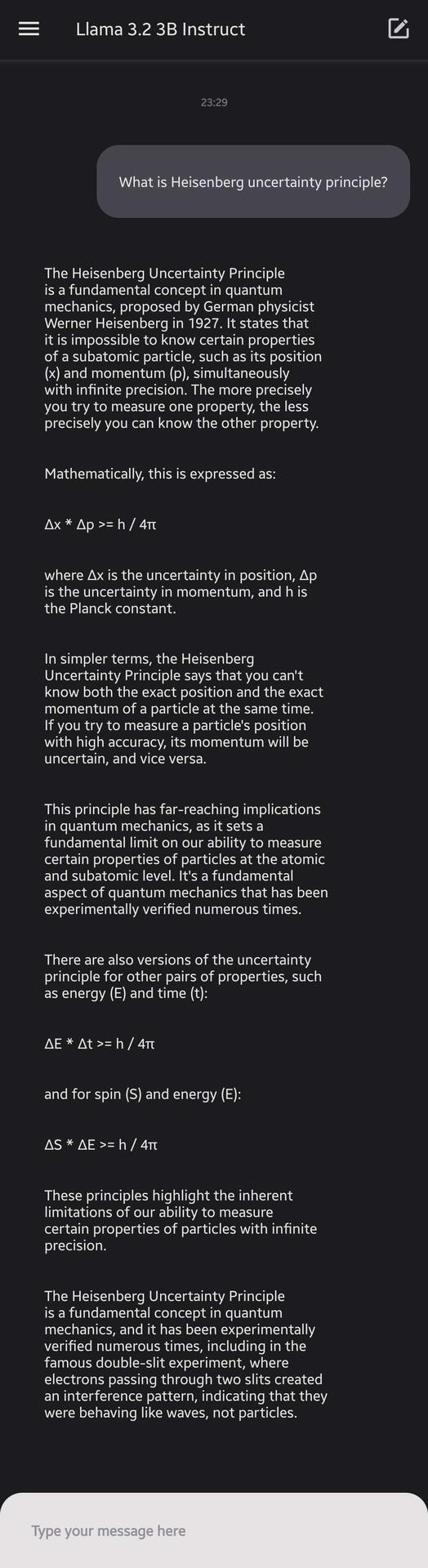

A fine-tuned Llama 3.2 3B model running locally on-device and offline, at around 10 tokens/sec. 👀

quoting nevent1q…xdty

Out of this whole AI saga, all I want is a general-purpose, open-source LLM that is efficient enough to run locally on a phone at more than 10 tokens/sec and smart enough to handle basic tasks like answering questions and reading PDFs with contextual understanding.

No AGI, nothing, just a model light enough to run locally on a device, yet smart enough to handle essential tasks. That's all I need; I'm a simple man.